Over the past few years I’ve been developing a body of research that I collectively refer to as ‘real-time phylogenomics’, which is also the name of our mini-site for MinION-based rapid identification-by-sequencing. Since our paper on this will hopefully be published soon, it’s probably worth defining what I hope this term denotes now, what it does not, and ultimately where I hope this research is going.

‘Phylogenomics’ is simple enough, and Jonathan Eisen at UC Davis has been a fantastic advocate of the concept. Essentially, phylogenomics is scaled-up molecular systematics, with datasets (usually derived from a genome annotation and/or transcriptome) comprising many coding loci rather than a few genes. ‘Many’ in this case usually means hundreds, or thousands, so we’re typically looking at primarily nuclear genes, although organelle genomes are often incorporated too, since they’re usually far easier to assemble and annotate reliably. The aim is, basically, to average phylogenetic signal over many loci by combining gene trees (or an analogous approach) to try to obtain phylogenies with higher confidence (single- or few-locus approaches, including barcodes no matter how judiciously chosen, are capable of producing incorrect trees with high confidence). The process is intensive, since genomes must be sequenced and then assembled to a sufficient standard to be reasonably certain of identifying orthologous loci. This isn’t the only use of the term (which also refers to phylogenies produced from whole-genome metagenomics), but it is the most straightforward and common one as far as eukaryote genomics is concerned, and certainly the one uppermost in my mind.

However, the results are often confusing, or at least more complex than we might hope: instead of a single phylogeny with high support from all loci, and robust to the model used, we often find that a high proportion of gene trees (10-30%, perhaps) agree with each other, but not with the modal (most common, e.g. majority-rule consensus) tree topology. For instance, among 2,326 loci in our 2013 paper on phylogenomics of the major bat families, we found that the position of a particular group of echolocators, which had been hotly debated for decades on the basis of morphological and single-locus approaches, showed such a pattern (some loci supporting the traditional grouping of Microchiroptera + Megachiroptera, but over 60% of loci supporting the newer Yangochiroptera + Yinpterochiroptera system). This discordance can arise for a variety of reasons, some biological and some methodological. The point is that we have a sufficiently detailed picture to let us choose between competing phylogenetic hypotheses with both statistical confidence and intuition based on comparison.
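To make the ‘modal topology versus discordant minority’ idea concrete, here is a minimal Python sketch of the bookkeeping involved. It is not the pipeline from our paper: the taxa `A`, `B`, `C`, the helper names `clades` and `topology_support`, and the ten toy loci are all made up for illustration, and each gene tree is assumed to have already been reduced to the set of clades it supports.

```python
from collections import Counter


def clades(*groups):
    """Encode a rooted topology as an order-independent set of clades.

    Hypothetical helper: each clade is a frozenset of taxon names, so
    ("A", "B") and ("B", "A") describe the same grouping.
    """
    return frozenset(frozenset(group) for group in groups)


def topology_support(gene_trees):
    """Return the modal topology and the fraction of loci behind each topology."""
    counts = Counter(gene_trees)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no gene trees supplied")
    modal, _ = counts.most_common(1)[0]
    fractions = {topology: n / total for topology, n in counts.items()}
    return modal, fractions


# Toy data (invented): ten loci, three competing resolutions of taxa A, B, C.
AB = clades(("A", "B"))
AC = clades(("C", "A"))  # same topology as clades(("A", "C"))
BC = clades(("B", "C"))
gene_trees = [AB] * 6 + [AC] * 3 + [BC] * 1

modal, support = topology_support(gene_trees)
for topology, fraction in sorted(support.items(), key=lambda kv: -kv[1]):
    label = " + ".join(sorted("".join(sorted(c)) for c in topology))
    print(f"{label}: {fraction:.0%} of loci")
```

In this toy case the modal topology is the one grouping `A` with `B`, while a sizeable minority of loci agree with each other on `A` with `C`. A real analysis would compare full trees (and weigh support values) rather than simple labels, but the tallying step is essentially this.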

These techniques have been on the horizon for a while (certainly since at least 2000) and have gathered pace over the last decade with improvements in computing, informatics, and especially next-generation sequencing. The other half of this equation, ‘real-time’ sequencing, has emerged much more recently and centres, obviously, on the MinION sequencer. Most work using this so far has focused either on the very impressive potential that long-read data offers for genomic analyses, particularly assembly, or on rapid ID of samples, e.g. the Quick/Loman Zika and Ebola monitoring studies and our own work.

So what, exactly, do we hope to achieve with phylogenomic-type analyses using real-time MinION data, and why?

Well, firstly, our work so far has shown that the existing pipeline (sample -> transport -> sequence -> assemble genome -> annotate genes -> align loci -> build trees) has lots of room for speedups, and we’re fairly confident that the inevitable tradeoff with accuracy when you omit or simplify certain steps (laboratory-standard sequencing, assembly) is at least compensated for by the volume of data alone. Recall that a ‘normal’ phylogenomic tree similar to our bat one might take two or more postdocs/students a year to generate from biological samples, often longer. A process taking a week instead would let you generate something like 50 analyses in a year rather than one! The most obvious application for this is just accelerating existing research, but the potential for transforming fieldwork and citizen science is considerable. This is because you can build trees that inform species relationships, even if the species in question isn’t known. In other words, a phylogenome can both reliably identify an unknown sample and tell you whether it is a new species.

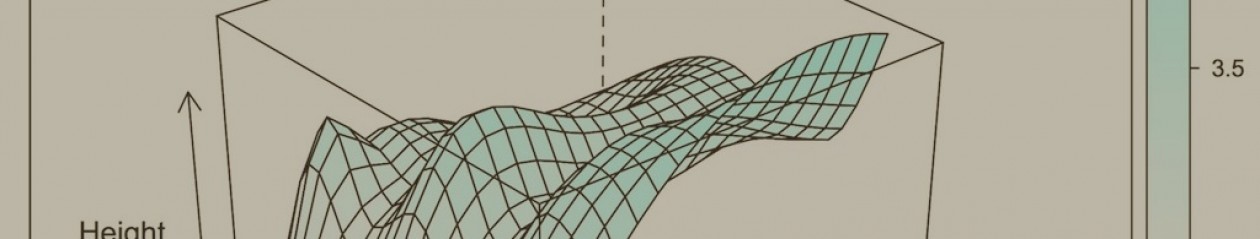

More excitingly, I think we need to have a deeper look at how we both construct and analyse evolutionary models. Life on Earth is best described, accurately and fully, by a network rather than a bifurcating tree, and this applies to loci as well as single genes. In other words, there is a single network that connects every locus in every living thing. Phylogenetic trees are only a bifurcating projection of this, while single- or multi-locus networks comprise only a part of it.

We’ve hitherto ignored this fact, largely because (a) trees are often a good approximation, especially in the case of eukaryote nuclear genes, and (b) the data and computation requirements that a ‘network-of-life’ analysis implies are formidable. However, cracks are beginning to appear in both arguments. Firstly, many loci are subject to real biological phenomena (horizontal gene transfer, selection leading to adaptive convergence, etc.) which give erroneous trees, as discussed above. Meanwhile, prokaryotic and viral inference is rarely even this straightforward. Secondly, expanding computing power, algorithmic complexity, and sequencing capacity (imagine just 1,000 high schools across the world, regularly using a MinION for class projects…) mean the question for us today really isn’t ‘how do we get data’, but ‘how ambitious do we want to be with it?’

Since my PhD, but especially since 2012, I’ve been developing this theme. Ultimately I think the answer lies in the continuous analysis of public phylogenomic data. Imagine multiple distributed but linked analyses, continuously running to infer parts of the network of life, updating their models asynchronously both as new data flood in and by exchanging information with each other. This is really what we mean by real-time phylogenomics: nothing less than a complete Network of Life, living in the cloud, publicly available, and collaboratively and continuously inferred from real-time sequence data.
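As a very rough illustration of what ‘linked analyses exchanging information’ could mean in practice, here is a toy Python sketch. Everything in it is an assumption made for the sake of the example rather than an existing system: the class name `NetworkFragment`, its methods, the locus IDs, and the idea of scoring a relationship by the fraction of loci that support it. Each fragment keeps the loci it has seen, can take in new loci one at a time, and can absorb another fragment’s loci without double-counting.

```python
class NetworkFragment:
    """Toy stand-in for one analysis inferring part of a larger network.

    Hypothetical design: each locus contributes a set of undirected edges
    (pairs of taxa it links), stored per locus so merges never double-count.
    """

    def __init__(self):
        self.loci = {}  # locus_id -> frozenset of edges (each edge a frozenset)

    def ingest(self, locus_id, edges):
        """Add one newly sequenced locus; return False if it was already known."""
        if locus_id in self.loci:
            return False
        self.loci[locus_id] = frozenset(frozenset(edge) for edge in edges)
        return True

    def merge(self, other):
        """Absorb loci from another fragment; return how many were new."""
        added = 0
        for locus_id, edges in other.loci.items():
            if locus_id not in self.loci:
                self.loci[locus_id] = edges
                added += 1
        return added

    def support(self, a, b):
        """Fraction of known loci whose edges link taxa a and b."""
        if not self.loci:
            return 0.0
        edge = frozenset((a, b))
        hits = sum(edge in edges for edges in self.loci.values())
        return hits / len(self.loci)


# Two independent analyses (say, a lab and a school) with one locus in common.
lab = NetworkFragment()
school = NetworkFragment()
lab.ingest("locus1", [("A", "B")])
lab.ingest("locus2", [("A", "C")])
school.ingest("locus2", [("A", "C")])
school.ingest("locus3", [("A", "B"), ("B", "C")])

added = lab.merge(school)  # only locus3 is new to the lab
print(added, round(lab.support("A", "B"), 2))
```

A real implementation would need proper inference instead of counting, plus some way to reconcile conflicting models between analyses, but the shape of the problem (incremental ingestion plus idempotent merging) is roughly this.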

So… that’s what I plan to spend the 2020s doing, anyway.